Normalization And Scaling In Neural Networks

Hello everybody.

I'm taking a Coursera course on neural networks.

Today I figured out why normalization and scaling make neural networks learn faster. It all comes down to the error surface and optimization. Put simply, the task of a neural network is to find the global minimum of the error surface. Training algorithms move gradually across the error surface until they finally reach that minimum. Getting to the minimum of a circular error surface is faster than getting to the minimum of an elliptical or otherwise elongated one: on a circle the gradient points straight at the minimum, while on a stretched ellipse it mostly points across the valley rather than along it.
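To make the circle-versus-ellipse claim concrete, here is a minimal sketch that runs plain gradient descent on a toy quadratic error surface. The function `gd_steps`, the curvature values, and the step-size rule are my own assumptions for illustration, not something taken from the course.

```python
import numpy as np

def gd_steps(curvatures, tol=1e-6, max_steps=100_000):
    """Count gradient-descent steps on E(w) = 0.5 * sum(c_i * w_i**2)."""
    c = np.asarray(curvatures, dtype=float)
    # Assumed step size: 1 / largest curvature, which keeps the update
    # stable along the steepest axis of the bowl.
    lr = 1.0 / c.max()
    w = np.ones_like(c)  # start at the point (1, 1)
    for step in range(1, max_steps + 1):
        w = w - lr * c * w  # the gradient of E with respect to w is c * w
        if np.linalg.norm(w) < tol:
            return step
    return max_steps

circle_steps = gd_steps([1.0, 1.0])     # equal curvatures: circular contours
ellipse_steps = gd_steps([1.0, 100.0])  # unequal curvatures: elongated ellipse

print("circle:", circle_steps, "steps")
print("ellipse:", ellipse_steps, "steps")
```

On the circular bowl a single step lands on the minimum, while on the ellipse the same rule crawls along the flat direction and needs far more steps.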

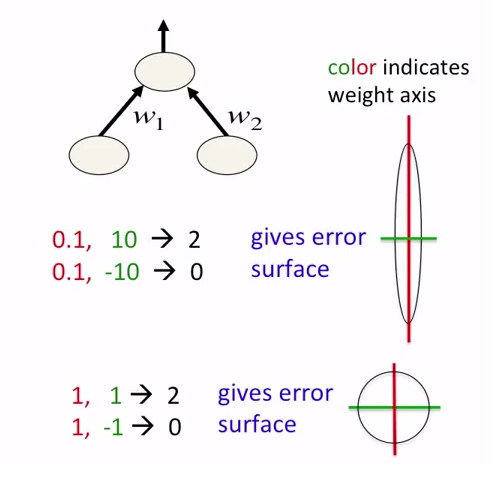

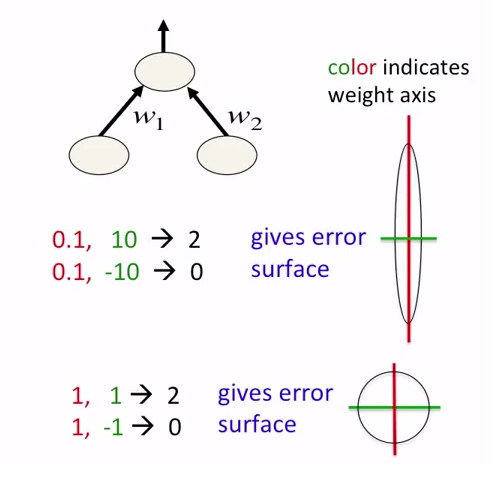

Suppose we train a neural network on two samples:

101, 101 -> 2

101, 99 -> 0

Then the error surface will be an elongated oval, and convergence will be relatively slow. But if we normalize the data by shifting it from the range [99; 101] to the range [-1; 1], we get a circular error surface, on which training converges much faster.
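One way to check this numerically is to look at the curvature of the error surface. The sketch below assumes a single linear neuron with squared error, so that the Hessian of the error is X^T X (X holds the inputs, one sample per row). That model and the helper `hessian_condition` are my assumptions. The ratio of the largest to the smallest eigenvalue tells how stretched the contours are: 1 means a circle.

```python
import numpy as np

def hessian_condition(inputs):
    """Eigenvalue ratio of X^T X, the Hessian of squared error for a linear neuron."""
    X = np.asarray(inputs, dtype=float)
    H = X.T @ X
    eig = np.linalg.eigvalsh(H)  # eigenvalues in ascending order
    return eig[-1] / eig[0]

raw = np.array([[101, 101],
                [101, 99]])
shifted = raw - 100  # shift the range [99; 101] to [-1; 1]

cond_raw = hessian_condition(raw)
cond_shifted = hessian_condition(shifted)

print("raw inputs:    ", cond_raw)
print("shifted inputs:", cond_shifted)
```

For the raw inputs the ratio comes out at roughly 40,000, a very thin ellipse. After subtracting 100 the Hessian is a multiple of the identity and the ratio is 1.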

See the picture:

The same is true for scaling. Let's say we have two training samples, each with two inputs and one output:

0.1, 10 -> 2

0.1, -10 -> 0

If we rescale the inputs so that both components have a similar magnitude, look at how the error surface changes:
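The same numerical check works for scaling. In the sketch below I divide each input column by its largest absolute value; that particular rule (max-abs scaling) is my assumption, chosen only because it brings both inputs into [-1; 1]. As before, I assume a linear neuron with squared error, so the Hessian is X^T X.

```python
import numpy as np

unscaled = np.array([[0.1, 10.0],
                     [0.1, -10.0]])

# Max-abs scaling (assumed rule): divide every column by its largest magnitude.
scaled = unscaled / np.abs(unscaled).max(axis=0)

# Condition number of the Hessian X^T X; 1 means circular contours.
cond_unscaled = np.linalg.cond(unscaled.T @ unscaled)
cond_scaled = np.linalg.cond(scaled.T @ scaled)

print("scaled inputs:\n", scaled)
print("condition number before:", cond_unscaled)
print("condition number after: ", cond_scaled)
```

Before scaling, the error surface is about 10,000 times more curved along the second weight than along the first. After scaling, both directions have the same curvature.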
