Backpropagation in Encog

30 January 2015

Here is how the backpropagation trainer is declared in Encog:

var train = new Backpropagation(network, trainingSet, learningRate, momentum);

Today I figured out the purpose of the momentum parameter.

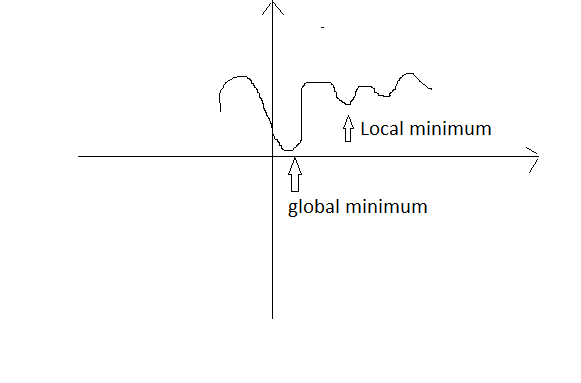

Here we have an error function with one global minimum and three local minima. To jump out of a local minimum and reach the global one, the neural network can take the previous weight update into account. Momentum is the coefficient that controls how much of the previous iteration's update is added to the current one. If it is 1, the previous update is carried over in full. If it is 0, the previous update is ignored.
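To make the effect of the coefficient concrete, here is a minimal sketch of the textbook momentum update rule. It is my assumption of the usual formula, not code copied from Encog's source, and the names `MomentumSketch` and `nextDelta` are made up for illustration. The new weight change is the plain gradient step plus `momentum` times the previous change.

```java
class MomentumSketch {
    // Textbook momentum rule (assumed, not taken from Encog's source):
    // new change = plain gradient step + momentum * previous change.
    static double nextDelta(double gradient, double previousDelta,
                            double learningRate, double momentum) {
        return -learningRate * gradient + momentum * previousDelta;
    }

    public static void main(String[] args) {
        double learningRate = 0.1;
        double gradient = 2.0;        // slope of the error at the current weight
        double previousDelta = -0.5;  // weight change made on the previous iteration

        // Compare how much of the previous change survives for each coefficient.
        for (double momentum : new double[] {0.0, 0.5, 1.0}) {
            double delta = nextDelta(gradient, previousDelta, learningRate, momentum);
            System.out.println("momentum=" + momentum + " delta=" + delta);
        }
    }
}
```

With momentum 0 only the gradient step remains, and with momentum 1 the whole previous change is added on top of it. That extra push is what can carry the weight through a shallow local minimum where the gradient alone would stall.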